In today’s hyper-competitive environment, businesses constantly pursue innovation to stay ahead. Whether it is a CPG company introducing new product categories, a retailer optimizing its assortment, or a financial services firm entering new market segments, innovation is a strategic imperative. The same holds in industries such as travel, logistics, IT, and electronics.

Innovation spans many aspects of a business: product improvements, operational changes, marketing methods, and sales strategies. These changes are carefully planned around the company’s best understanding of customer expectations. Even so, their success is not guaranteed, due to one or more of the following factors:

Small or unbalanced sample sizes in surveys

Surveys with limited responses can lead to inaccurate conclusions that don’t reflect the broader customer base. For example, drawing insights from just 50–100 participants may not be enough to represent diverse customer preferences.
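To put numbers on "may not be enough": under simple random sampling, the 95% margin of error for a survey proportion is roughly 1.96 * sqrt(p(1 - p) / n), which is largest at p = 0.5. The helper names below are illustrative, and the formula is the normal approximation without a finite-population correction.

```python
import math

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval for a proportion.

    p=0.5 gives the worst case (largest margin); z=1.96 corresponds to ~95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)

def required_sample_size(moe: float, p: float = 0.5, z: float = 1.96) -> int:
    """Smallest n whose margin of error is at most `moe` (no finite-population correction)."""
    return math.ceil((z / moe) ** 2 * p * (1 - p))

for n in (50, 100, 400, 1000):
    print(f"n={n:5d}  margin of error ~ +/-{margin_of_error(n) * 100:.1f} percentage points")
print("n needed for +/-5 points:", required_sample_size(0.05))
```

With 100 respondents the margin is about ±9.8 percentage points, so a 55% versus 45% split cannot be clearly distinguished from a tie; halving the margin requires roughly four times as many responses.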

Poor segmentation in customer feedback

Without proper segmentation, even large samples may misrepresent key customer groups.

Behavior-based segmentation often provides deeper insights than basic demographics alone, leading to more meaningful feedback.
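One standard way to keep every segment properly represented is stratified sampling with proportional allocation. In this sketch, customers are assigned to hypothetical behavioral segments by monthly visit frequency (the thresholds are made up), the sample is split across segments in proportion to their size, and customers are then drawn at random within each segment.

```python
import random
from collections import Counter, defaultdict

def proportional_allocation(segment_sizes: dict, total_sample: int) -> dict:
    """Split total_sample across segments in proportion to size (largest-remainder rounding)."""
    population = sum(segment_sizes.values())
    exact = {s: total_sample * n / population for s, n in segment_sizes.items()}
    alloc = {s: int(q) for s, q in exact.items()}
    # Hand out the slots lost to rounding down, largest fractional part first.
    leftover = total_sample - sum(alloc.values())
    for s in sorted(exact, key=lambda seg: exact[seg] - alloc[seg], reverse=True)[:leftover]:
        alloc[s] += 1
    return alloc

def stratified_sample(customers, segment_of, total_sample: int, seed: int = 0) -> list:
    """Draw a random sample within each segment, sized by proportional allocation."""
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for c in customers:
        by_segment[segment_of(c)].append(c)
    alloc = proportional_allocation({s: len(m) for s, m in by_segment.items()}, total_sample)
    sample = []
    for s, k in alloc.items():
        sample.extend(rng.sample(by_segment[s], k))
    return sample

def behavior_segment(customer: dict) -> str:
    # Hypothetical segmentation rule based on monthly store visits.
    v = customer["visits_per_month"]
    return "frequent" if v >= 4 else "occasional" if v >= 1 else "lapsed"

# Synthetic base: 300 frequent, 600 occasional, 100 lapsed customers.
customers = [{"id": i, "visits_per_month": 6 if i < 300 else 2 if i < 900 else 0} for i in range(1000)]
sample = stratified_sample(customers, behavior_segment, total_sample=50)
print(Counter(behavior_segment(c) for c in sample))
```

The 50-person sample always contains 30 occasional, 15 frequent, and 5 lapsed customers, whereas a simple random sample of the same size could easily under-represent the small lapsed segment.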

External factors affecting A/B testing outcomes

Real-world variables like weather or major events can distort test results, masking the actual impact of innovations. Identifying control groups that share similar external conditions with test groups is critical for accurate comparisons.

Insufficient time to collect enough data before an opportunity passes

In fast-moving domains like online advertising, waiting to collect enough data may result in missed market windows. Quick, data-driven decisions are essential when timing is critical and opportunities are fleeting.

Long development cycles due to labor-intensive testing processes

Thorough testing, especially in software or operations, is time-consuming and can delay product rollouts. Streamlining validation processes without compromising reliability is key to maintaining innovation momentum.

This is where Artificial Intelligence (AI) can play a transformative role: improving many aspects of testing, reducing bias, and yielding more rigorous measurements. In an increasingly dynamic marketplace, minimizing bias in testing is critical. AI equips organizations to test smarter, faster, and more accurately by optimizing everything from sampling to test case selection.

We will now take a look at how AI enables a far more accurate and smarter testing process.

AI in Consumer Behavior Testing

Customer research forms the foundation of many business decisions. However, traditional testing methods often fall short due to sampling bias or operational inefficiencies. AI can address these challenges across various survey and testing formats.

Representative Test Groups with AI

A major challenge in consumer behavior testing is selecting a group that reflects the broader customer base. In-person surveys, often conducted at specific times and locations, can introduce selection bias—for instance, surveying customers at 10 a.m. on weekdays may exclude working professionals. This skews results and limits the value of insights.

To overcome this, businesses can leverage AI to enhance the selection process. By analyzing purchase history, customer profiles, and behavioral patterns, AI models can predict when and where the most representative cross-section of customers is likely to shop. This allows for more strategic deployment of survey efforts—ensuring the data collected is balanced, diverse, and better aligned with the broader market. In doing so, businesses can significantly improve the reliability and relevance of insights derived from customer feedback.
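One way to operationalize this is to forecast, for each candidate time slot, how many visitors from each customer segment will be in the store, then choose the slots whose combined visitor mix is closest to the overall customer base. In this sketch the forecast counts are hypothetical placeholders for a model's predictions, closeness is measured with total variation distance, and slots are picked greedily.

```python
from collections import Counter

def mix(counts: Counter) -> dict:
    """Convert segment counts into segment shares."""
    total = sum(counts.values())
    return {seg: n / total for seg, n in counts.items()}

def tv_distance(p: dict, q: dict) -> float:
    """Total variation distance between two segment mixes (0 = identical, 1 = disjoint)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

def pick_survey_slots(slot_counts: dict, k: int) -> list:
    """Greedily choose k slots whose pooled visitors best match the overall customer mix."""
    overall = mix(sum(slot_counts.values(), Counter()))
    chosen, pooled = [], Counter()
    for _ in range(k):
        best = min(
            (s for s in slot_counts if s not in chosen),
            key=lambda s: tv_distance(mix(pooled + slot_counts[s]), overall),
        )
        chosen.append(best)
        pooled += slot_counts[best]
    return chosen

# Hypothetical forecast of visitors per segment in each candidate slot.
slot_counts = {
    "weekday_10am": Counter(retiree=60, parent=30, professional=10),
    "weekday_6pm": Counter(retiree=10, parent=30, professional=60),
    "saturday_11am": Counter(retiree=30, parent=40, professional=30),
}
print(pick_survey_slots(slot_counts, k=1))
```

With these numbers a single Saturday-morning slot is chosen over the weekday 10 a.m. slot, whose visitors are mostly retirees.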

Enhancing Survey Efficiency with AI

Online and mail-in surveys are often preferred over in-person methods for their broader reach. Online surveys are especially cost-effective and easy to scale. Mail-in surveys, however, are more expensive and typically suffer from low response rates—even with incentives like discount coupons. To meet response targets, companies often send mailers to three to five times the desired sample size, driving up costs and reducing efficiency.

AI can play a critical role in optimizing this process. By analyzing historical purchase data and customer behavior patterns, AI models can predict how likely each individual is to respond to a survey. Companies can then prioritize the customers most likely to engage and send fewer mailers. This improves cost efficiency, and when the targeting is balanced across customer segments, it can also improve the quality and representativeness of the feedback collected.
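A response-propensity model can be as simple as logistic regression. The sketch below fits one by plain gradient descent on synthetic data with two hypothetical features (a scaled purchase frequency and whether the customer answered a past survey), then mails customers in descending order of predicted response probability until the expected number of responses reaches the target. The features, data, and helper names are illustrative assumptions; a production model would use richer features and an established ML library.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.5, epochs=500):
    """Batch gradient descent for logistic regression; the last weight is the intercept."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + w[-1]) - yi
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w

def predict(w, x) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])

def plan_mailing(scores: dict, target_responses: float) -> list:
    """Mail highest-propensity customers first until expected responses reach the target."""
    chosen, expected = [], 0.0
    for cid, p in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        if expected >= target_responses:
            break
        chosen.append(cid)
        expected += p
    return chosen

# Synthetic history: x = [scaled purchase frequency, answered a past survey (0/1)].
rng = random.Random(42)
X, y = [], []
for _ in range(400):
    x = [rng.random(), float(rng.random() < 0.3)]
    X.append(x)
    y.append(1 if rng.random() < sigmoid(-3 + 3 * x[0] + 1.5 * x[1]) else 0)

w = fit_logistic(X, y)
scores = {i: predict(w, x) for i, x in enumerate(X)}
mailing = plan_mailing(scores, target_responses=30)
mean_score = sum(scores.values()) / len(scores)
print(f"targeted: {len(mailing)} mailers; untargeted needs ~{math.ceil(30 / mean_score)}")
```

Because greedy top-down selection favors the most engaged customers, running `plan_mailing` separately within each customer segment, with per-segment targets, is one way to keep the resulting sample representative.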

Improved In-Store Testing Accuracy

Evaluating the effectiveness of in-store promotions and price discounts requires careful analysis of customer response at the store level—a task that comes with several complexities. Often, detailed customer profiles and demographic data at individual store locations are not readily available, making it difficult to attribute results to specific customer behaviors. In addition, external variables such as local events, weather conditions, or holidays can significantly influence store traffic and sales, potentially distorting the true impact of the promotion being tested.

To address this, businesses often rely on A/B testing methodologies, where a test store is compared against a carefully selected control store. The control store is chosen to match the test store as closely as possible in terms of customer demographics, purchasing patterns, and external conditions during the test period. This approach helps isolate the effect of the promotion itself by minimizing the influence of outside factors, thereby enabling more accurate measurement and more confident decision-making.
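A minimal version of this design pairs nearest-neighbor matching with a difference-in-differences estimate. Stores are described by hypothetical features (average basket size, share of family shoppers, weekly footfall), standardized so no single feature dominates, and the control is the closest other store. The lift is the test store's change in sales minus the control store's change over the same period, which nets out shocks such as weather or holidays that affect both stores alike. This assumes the two stores would have trended in parallel without the promotion; a learned similarity model could replace the plain Euclidean distance used here.

```python
import math

def standardize(rows: dict) -> dict:
    """Z-score each feature column across stores."""
    cols = list(zip(*rows.values()))
    means = [sum(c) / len(c) for c in cols]
    sds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0 for c, m in zip(cols, means)]
    return {name: [(v - m) / s for v, m, s in zip(vals, means, sds)] for name, vals in rows.items()}

def pick_control(test_store: str, features: dict) -> str:
    """Return the store closest to the test store in standardized feature space."""
    z = standardize(features)
    return min((s for s in z if s != test_store), key=lambda s: math.dist(z[s], z[test_store]))

def did_lift(test_pre: float, test_post: float, ctrl_pre: float, ctrl_post: float) -> float:
    """Difference-in-differences: test store's change minus the control store's change."""
    return (test_post - test_pre) - (ctrl_post - ctrl_pre)

# Hypothetical features: [avg basket ($), share of family shoppers, weekly footfall (thousands)].
stores = {
    "S01": [42.0, 0.35, 12.0],  # store running the promotion
    "S02": [41.0, 0.33, 11.5],
    "S03": [65.0, 0.10, 20.0],
    "S04": [30.0, 0.60, 6.0],
}
control = pick_control("S01", stores)
lift = did_lift(test_pre=100.0, test_post=118.0, ctrl_pre=95.0, ctrl_post=101.0)
print(control, lift)
```

Here S02 is selected as the control, and the estimated lift is 12 units of weekly sales: the test store grew by 18, of which 6 is attributed to conditions it shared with the control.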

Optimizing Digital Advertising with AI-Driven Testing

Digital advertising operates in a fast-paced environment where timing is critical and click-through rates (CTRs) are typically low. Advertisers often test multiple message variations to identify the most effective one, especially when promoting time-sensitive events. However, in digital channels such as search and shopping ads, the audience profile is often unknown in advance, making it difficult to target specific customer segments.

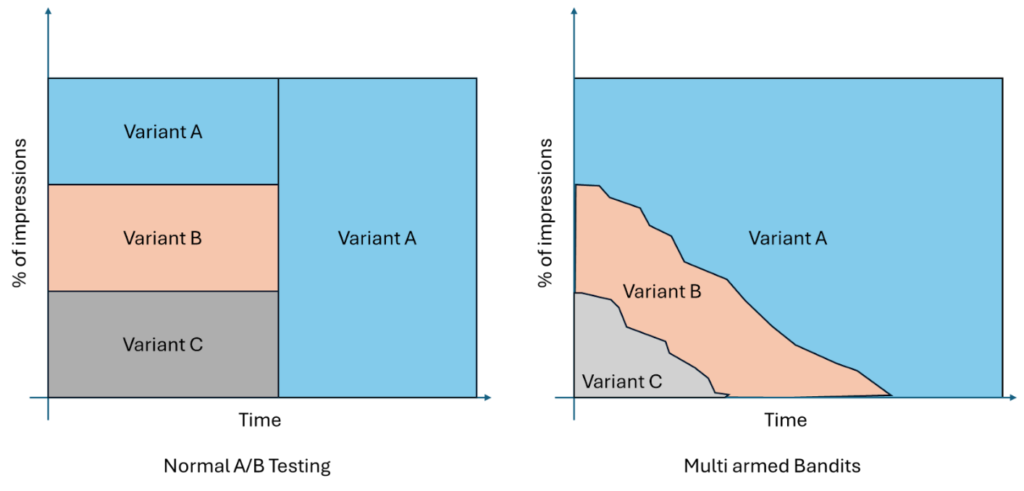

Traditional A/B testing methods, which require waiting for statistically significant sample sizes for each variant, can be too slow for these dynamic campaigns. This is where AI-driven approaches like Multi-Armed Bandits (MAB) offer a powerful advantage. MAB algorithms continuously test and evaluate multiple ad variants in real time, dynamically allocating more impressions to higher-performing options as new data comes in. This adaptive approach not only accelerates decision-making but also boosts overall campaign performance by maximizing exposure to the best-performing ads.

By learning from each customer interaction, MAB methods refine their estimates and quickly converge on the most effective message, ensuring that time-sensitive opportunities are not lost to long testing cycles. The result is a more efficient use of ad spend and higher engagement across the campaign period.
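Thompson sampling is one common MAB strategy (alternatives include epsilon-greedy and UCB). The sketch keeps a Beta posterior over each ad variant's CTR, samples from every posterior on each impression, and shows the variant with the highest draw. The CTR values are made up and are used only to simulate clicks; the algorithm itself sees only impressions and clicks.

```python
import random

def thompson_sampling(true_ctrs: list, impressions: int, seed: int = 7):
    """Beta-Bernoulli Thompson sampling; true_ctrs is used only to simulate user clicks."""
    rng = random.Random(seed)
    clicks = [0] * len(true_ctrs)
    shows = [0] * len(true_ctrs)
    for _ in range(impressions):
        # Draw a plausible CTR per variant from its Beta(1 + clicks, 1 + non-clicks) posterior.
        draws = [rng.betavariate(1 + c, 1 + n - c) for c, n in zip(clicks, shows)]
        arm = max(range(len(draws)), key=draws.__getitem__)
        shows[arm] += 1
        if rng.random() < true_ctrs[arm]:  # simulated click
            clicks[arm] += 1
    return shows, clicks

shows, clicks = thompson_sampling([0.020, 0.025, 0.040], impressions=10000)
for i, (n, c) in enumerate(zip(shows, clicks)):
    print(f"variant {i}: {n} impressions, observed CTR {c / max(n, 1):.3%}")
```

Over the run, most impressions shift to the variant with the highest true CTR, while the weaker variants still receive occasional traffic in case the early estimates were wrong.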

The Evolving Landscape of Product Testing and the Role of AI

Product testing varies significantly across industries, shaped by the nature of the product and the complexity of its development process. For commercial goods such as consumer packaged goods (CPG) and white goods, testing is typically carried out in controlled lab environments before mass production. These tests are well-defined, limited in scope, and usually completed in a relatively short time frame. The parameters for assessing product quality are clear, and the environment is stable, making the testing process straightforward.

However, in domains like electronic chip design and software development, testing becomes far more complex and continuous. These products are built with digital design tools and modular development methods (such as hardware description languages for chips and object-oriented programming for software) that let multiple teams develop components in parallel. As these components come together, the overall product becomes too intricate for simple validation methods. Specialized testing teams are brought in to verify that each iteration of the design behaves as expected. To ensure objectivity, these teams often treat the design as a “black box,” testing outputs without access to the underlying code.

In these high-tech environments, testing revolves around two critical metrics:

- Line coverage, which measures whether every line of executable code has been exercised by at least one test. Any line that is never executed may harbor undetected bugs.

- Functional coverage, which measures whether the required features and scenarios have been tested and behave correctly. For instance, a calculator must be tested on operations like addition and division, including edge cases such as division by zero, and must handle each of them consistently.

Testing is especially rigorous in chip design because any bug post-production is extremely costly to fix—requiring hardware recalls or redesigns. In contrast, software bugs, while still serious, can typically be resolved through patches and updates.

Due to this complexity, test cycles are time-consuming. Developers often build on legacy code, leaving unused or redundant segments intact. Testing teams, using high-level design descriptions and prior experience, create large test suites to ensure comprehensive coverage. This makes each test cycle lengthy, and iterative development methods—where design and testing happen in multiple rounds—further add to the total time investment.

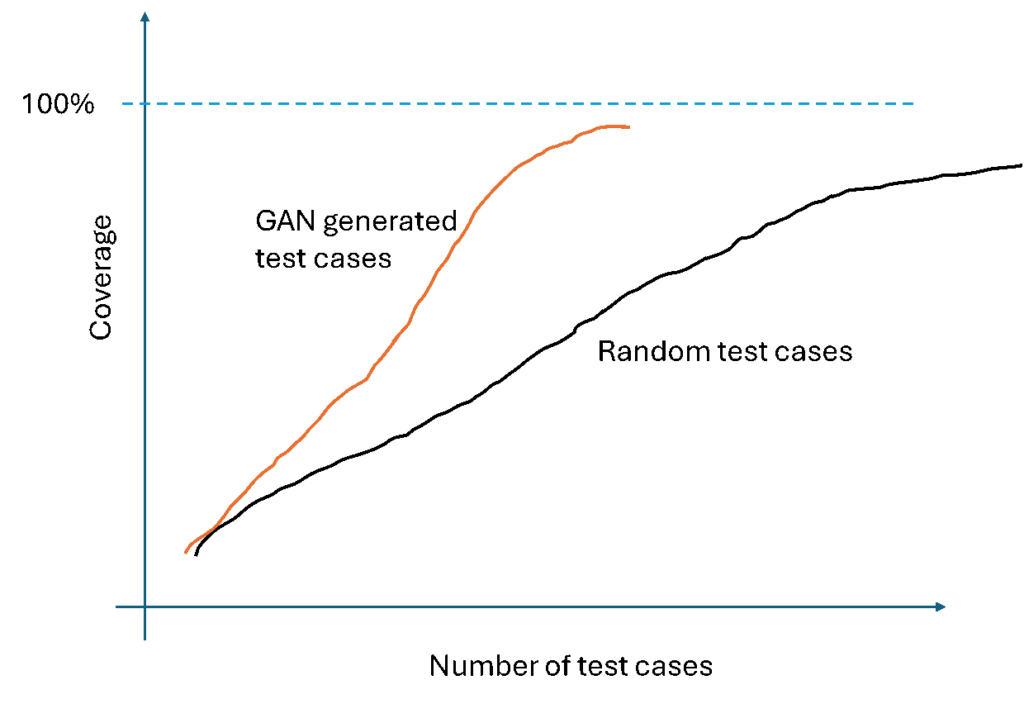

AI models can significantly reduce the testing load by identifying redundant or low-value test cases early in the process. Generative Adversarial Networks (GANs), for example, can be used to generate test cases that are more likely to improve coverage, since they can learn to produce inputs resembling under-tested scenarios rather than sampling inputs at random.
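What "redundant" means becomes concrete once per-test coverage is known: removing redundant tests is a set-cover problem. The sketch below uses the classical greedy heuristic, not a GAN or any learned model, and the test names and coverage points are made up.

```python
def minimize_suite(coverage: dict) -> list:
    """Greedy set cover: repeatedly keep the test covering the most not-yet-covered points."""
    remaining = set().union(*coverage.values())
    kept = []
    while remaining:
        best = max(coverage, key=lambda t: len(coverage[t] & remaining))
        kept.append(best)
        remaining -= coverage[best]
    return kept

# Hypothetical coverage points hit by each test.
coverage = {
    "t1": {"line_10", "line_11", "line_12"},
    "t2": {"line_11", "line_12"},  # fully covered by t1
    "t3": {"line_20", "line_21"},
    "t4": {"line_10", "line_20"},  # redundant once t1 and t3 are kept
    "t5": {"line_30"},
}
kept = minimize_suite(coverage)
print("keep:", kept, "drop:", sorted(set(coverage) - set(kept)))
```

The reduced suite keeps t1, t3, and t5 and still reaches every coverage point the original five tests reached.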

Graph Neural Networks (GNNs) are another powerful AI tool transforming product testing. GNNs can generate rich, structured representations of code and design logic. For mature or near-complete designs, GNNs can predict the potential code coverage achievable with a given set of test cases. This predictive capability is much faster than executing full test runs. With this information, testing teams can pre-select an optimized set of test cases that are likely to deliver the desired coverage, significantly accelerating the validation phase.
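Once a model can predict coverage, test selection can run on predictions instead of full simulations. In this sketch, `predicted` is a hypothetical stand-in for a trained GNN's output: for each test, the estimated probability that it hits each coverage point. Treating tests as hitting points independently (a simplifying assumption), the expected coverage of a suite is the average over points of 1 minus the product of the miss probabilities, and tests are added greedily until a target is reached.

```python
def expected_coverage(suite: list, hit_prob: dict, points: list) -> float:
    """Expected fraction of points hit, assuming tests hit points independently."""
    total = 0.0
    for pt in points:
        miss = 1.0
        for t in suite:
            miss *= 1.0 - hit_prob[t].get(pt, 0.0)
        total += 1.0 - miss
    return total / len(points)

def select_tests(hit_prob: dict, points: list, target: float) -> list:
    """Greedily add the test that most raises expected coverage until the target is met."""
    chosen, candidates = [], set(hit_prob)
    while candidates and expected_coverage(chosen, hit_prob, points) < target:
        best = max(sorted(candidates), key=lambda t: expected_coverage(chosen + [t], hit_prob, points))
        chosen.append(best)
        candidates.remove(best)
    return chosen

# Hypothetical model predictions: P(test hits coverage point).
predicted = {
    "t_smoke": {"p1": 0.9, "p2": 0.9},
    "t_div": {"p3": 0.8, "p4": 0.7},
    "t_random1": {"p1": 0.5, "p3": 0.2},
    "t_corner": {"p5": 0.95},
}
points = ["p1", "p2", "p3", "p4", "p5"]
suite = select_tests(predicted, points, target=0.8)
print(suite, round(expected_coverage(suite, predicted, points), 2))
```

Three of the four tests are enough to reach an expected coverage of 0.85, and `t_random1` is never run; evaluating these estimates is far cheaper than executing the tests.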

Additionally, Reinforcement Learning and Transformer-based models operating on tabular or structured test data have also shown promise in optimizing test strategies and prioritizing impactful test paths.

By integrating these AI techniques into the testing process, organizations can reduce time-to-market, lower testing costs, and maintain or even improve product reliability. AI doesn’t just speed up testing—it makes it smarter, more targeted, and better aligned with the complexity of modern product development.

Future Directions with GenAI & LLMs

As Large Language Models (LLMs) and Generative AI (GenAI) increasingly contribute to code generation, the nature of software development is rapidly evolving. These tools can produce functional code quickly by drawing on vast amounts of training data, often offering popular or commonly accepted solutions. However, subtle bugs or logic mismatches—arising from differences between actual requirements and AI-generated code—can be difficult to detect through traditional testing methods.

In this new landscape, robust and intelligent testing is more critical than ever. AI-driven testing frameworks not only accelerate validation but can also help uncover hidden biases and logic errors that may go unnoticed in standard test cycles. As AI becomes integral to both code creation and testing, this dual role can make the development pipeline more resilient, efficient, and reliable.